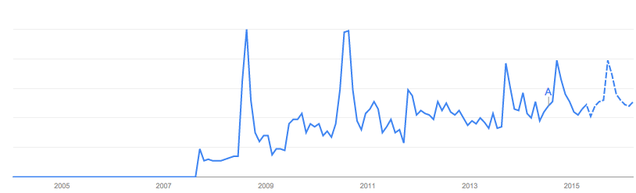

We live in a time where auto theft is incredibly impractical. Criminals in 2015 struggle to get past electronic security and alarm systems, which is reflected in a drop of over 90% in NYC auto theft since the early ’90s. These days, even a successfully stolen vehicle can be recovered with GPS tracking, and incidents of theft are often caught on video.

It might seem like convenience is weakness, but since car theft is way down, that might not hold true at the moment. The security holes that seem most vulnerable to exploitation revolve around the key fob. Fobs are those small black electronic keys that everyone uses to unlock their car these days. They work by sending a predetermined electronic signal that must be authenticated by the car’s CAN system. If the authentication checks out, the doors unlock. In newer cars, the engine will start via push button if the fob is in the immediate vicinity of the car, so the driver doesn’t have to fish it out of her pocket.

Etymology of the word fob: Written evidence of the word's usage has been traced to 1888. Almost no one uses a pocket watch these days, but a fob was originally an ornament attached to a pocket watch chain. The word hung around as an occasional, outdated way to refer to key chains. In the '80s, the consumer market was introduced to devices that allowed a car to be unlocked or started remotely. The small electronic device was easily attached to a conventional set of car keys, and within a few years the term fob key was generally used to describe any electronic key entry system that stored a code in a device, including hotel keycards as well as the remote car unlocking device the word usually describes.

Let’s take a look at three ways a fob key can be hacked.

Recording fob signals for replay.

This is one of those urban legends that’s been around since at least 2008. The story goes: thieves record the key fob signal and later replay it with a dummy fob. The car can’t tell the difference and unlocks or starts as if the correct key fob had been used. It’s easy for the thief to control the schedule and catch the victim unawares because the thief doesn’t have to interact with the fob in real time. Sounds like the most effective way to hack a key fob, right? Problem is, each signal is unique, created with an algorithm that includes time. If the fob and the car are not synchronized, the fob can’t open the lock, so a recorded signal played back later wouldn’t open it either.

The conventional wisdom is that the devices, proprietary knowledge and experience needed to make this method work are not worth a stolen car’s worth of risk. Secrets leak, but honestly, a team organized enough to steal a car this way could use the same skills to make a lot more money legally. Lastly, if you could reverse engineer and record fob signals, the FBI would already be watching you. The people who stole cars in the ’90s were largely nothing like the crews in the Fast and Furious franchise. The idea that a huge tech security op could be thwarted isn’t necessarily far-fetched, but there are no recorded cases. Not one. For that to change, someone needs to figure out how the sync code is incorporated into the algorithm, and apparently no one has.
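To make the "each signal is unique" idea concrete, here is a minimal sketch of why a recorded code is useless on replay. It is a toy, not any manufacturer's scheme: it swaps the time value the article mentions for a shared counter as the changing input, and the names `Fob`, `Car`, `make_code`, and `WINDOW`, along with the choice of HMAC-SHA256, are all assumptions made for illustration.

```python
import hashlib
import hmac

WINDOW = 16  # hypothetical: how many button presses ahead the car will accept


def make_code(secret: bytes, counter: int) -> bytes:
    """Derive a one-time code from the shared secret and a counter value."""
    msg = counter.to_bytes(8, "big")
    return hmac.new(secret, msg, hashlib.sha256).digest()[:8]


class Fob:
    """Toy fob: every press advances the counter and emits a fresh code."""

    def __init__(self, secret: bytes):
        self.secret = secret
        self.counter = 0

    def press(self) -> bytes:
        self.counter += 1
        return make_code(self.secret, self.counter)


class Car:
    """Toy receiver: accepts only codes for counters it has not seen yet."""

    def __init__(self, secret: bytes):
        self.secret = secret
        self.last_counter = 0  # highest counter value accepted so far

    def try_unlock(self, code: bytes) -> bool:
        # Only counters strictly ahead of the last accepted one are tried,
        # so an already-used (recorded) code can never match again.
        start = self.last_counter + 1
        for candidate in range(start, start + WINDOW):
            if hmac.compare_digest(code, make_code(self.secret, candidate)):
                self.last_counter = candidate
                return True
        return False


if __name__ == "__main__":
    secret = b"demo-shared-secret"
    fob, car = Fob(secret), Car(secret)
    recorded = fob.press()            # the thief records this transmission
    print(car.try_unlock(recorded))   # True: the owner's real press
    print(car.try_unlock(recorded))   # False: the replayed recording
```

The window also illustrates the synchronization point: if the fob gets more than `WINDOW` presses ahead of the car, even the genuine fob stops working until the two are re-synced.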

Amplifying the fob signal to trigger the auto-unlock feature.

Not only is this method genius, it is rumored to be in use already. Eyewitnesses claim to have seen it in action, which sparked theories about the methodology. Unlike recording a signal, amplification is a lot cheaper and requires almost no proprietary knowledge of the code to pull off. It works like this: a device picks up the range of frequencies the key fob is giving off and boosts them, extending the fob’s reach. Some cars can sense the authentic key fob within a five-foot range and auto-unlock or autostart their ignitions. With a signal amp, the engine can theoretically be started if the real key fob is within 30 feet. So, the keys can be on your nightstand but the car thinks you are at the car door. The thief can then open the door, sit in the driver’s seat and start the ignition with the push button as if the key fob were in the car with the thief. I thought about repeating some of the anecdotes I found online about this method, but none of them are confirmed. No one has tested it, but it looks like a signal booster can be bought online for pretty cheap if you know what to buy ($17 – $300). Last week, NYT ran a piece about signal boosting. You can read that here.
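For a rough sense of scale, here is a back-of-the-envelope sketch of how much extra gain it would take to stretch a 5-foot detection range to 30 feet. It assumes an idealized inverse-square falloff (received power drops with the square of distance), which real radio environments and real entry systems will not follow exactly, so treat the number as illustrative only. The function names are hypothetical.

```python
import math


def gain_db_for_range(base_range_ft: float, target_range_ft: float) -> float:
    """Extra gain in dB needed to reach target range, assuming inverse-square falloff."""
    return 20 * math.log10(target_range_ft / base_range_ft)


def boosted_range_ft(base_range_ft: float, gain_db: float) -> float:
    """Range reached with a given extra gain, under the same idealized assumption."""
    return base_range_ft * 10 ** (gain_db / 20)


if __name__ == "__main__":
    gain = gain_db_for_range(5, 30)   # the 5 ft and 30 ft figures from the article
    print(f"{gain:.1f} dB")           # about 15.6 dB
    print(f"{boosted_range_ft(5, gain):.0f} ft")
```

Under that simple model, a sixfold increase in range needs roughly 15.6 dB of gain, which is a modest amount for an amplifier and fits the article's point that this approach is cheap compared with cracking the code.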

Random signal generator.

So unique codes mean you can’t record the signal and reuse it without the proprietary algorithm, and signal amplification might not work on some systems in the near future. The rumors of it working already have car companies developing receivers sensitive enough to detect the distortion and interference caused by an amp. But there are exceptions where the signal is not random, such as service codes. Manufacturers have override unlock codes and reset devices to assist with lost key fobs and maintenance or emergency cases. When these codes are leaked, they often open up a brief but large hole in security, during which thousands of cars can be swiped. The main reason it isn’t happening already has more to do with organized crime not being organized enough to plan for and exploit that security hole. Or, you know, maybe the codes just haven’t leaked yet.

Hardware construction.

Constructing the hardware components takes specialized knowledge. Searching for information about this stuff is bound to attract NSA attention when it is followed by parts being ordered. The kind of guy who likes to sit in a workshop ordering parts and tinkering all day isn’t always the one who wants to go out and take risks with newer, higher-end cars. That is the kind of multifaceted thief NYC was famous for before the numbers plunged in the ’90s, but the hardware is becoming more and more esoteric. People are not as apt to work on devices with such small parts for projects that carry such high risk. For that reason, there is more money to be made in producing a bunch of low-cost black market devices that are already calibrated and tested to work. Buying such a device on the street and using it before selling it off again might leave a smaller trail than building it in a sketchy apartment-turned-lab that is sure to be searched if a heist goes wrong.

Paper trail & identity theft.

Technology has made it really difficult to even take the car in the first place, but once you have a stolen car, it is almost impossible to get rid of these days. There can be multiple tracking devices and serial number locations in one car, and if the operation isn’t extremely current, the likelihood of being caught red-handed with the car goes up quickly.

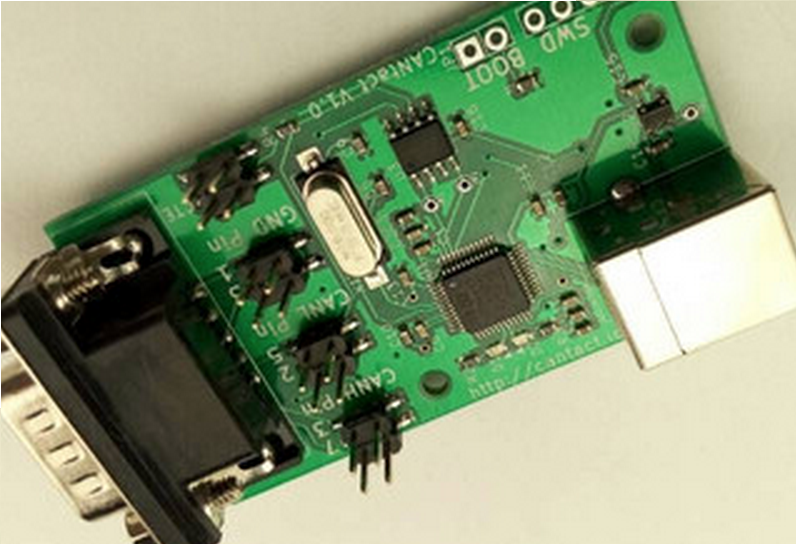

Once the car is stolen, a tech-savvy thief would need special equipment to access the on-board computer and do things like disable the GPS system, take any additional tracking system offline, and prevent tech support from manipulating the vehicle’s electronics. Equipment to hack the car’s CAN system has been expensive and shrouded in mystery for the last couple of decades, but recently the internet has united hackers and security researchers to create custom hardware like the CANtact, a device that lets you hack a car’s CPU for $60.

Jonathan Howard

Jonathan is a freelance writer living in Brooklyn, NY