Quantum computer prototypes make mistakes. It’s in their nature. Can redundancy correct them?

Quantum memory promises speed combined with energy efficiency. If it becomes viable, it will be used in phones, laptops and other devices, giving us all faster, more trustworthy tech that requires less power to operate. Before we see it applied, the hardware needs redundant memory cells to check and double-check its own errors.

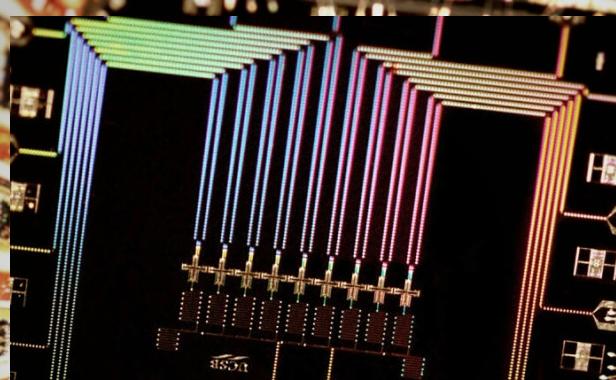

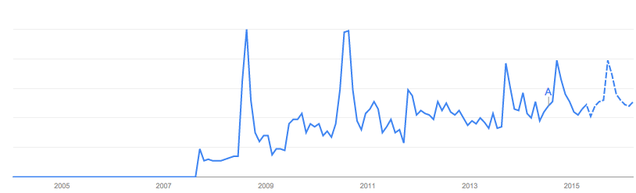

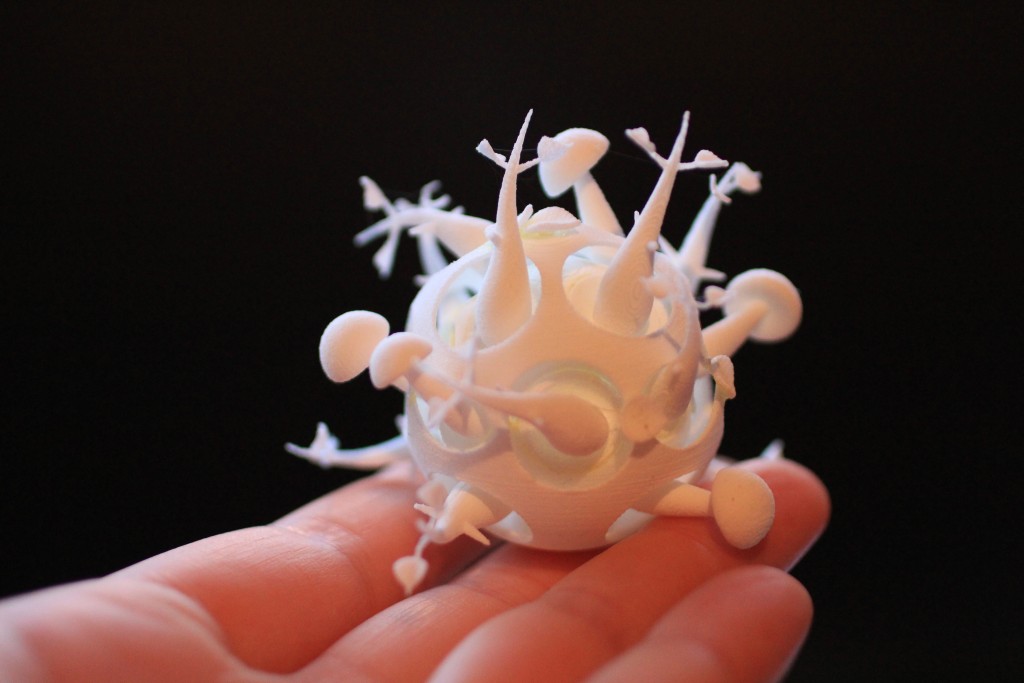

All indications are that quantum tech is poised to usher in the next round of truly revolutionary devices, but first scientists must solve the problem of memory cells saving the wrong answer. Quantum physicists must redesign the circuitry that exploits quantum behavior. The basic memory cell is called a qubit. The qubit takes advantage of quantum mechanics to transfer data at an almost instantaneous rate, but the data is sometimes corrupted with errors. The qubit is vulnerable to errors because it is physically sensitive to small changes in its environment. The problem has been difficult to solve because it is a hardware issue, not a software design issue. The lab of UC Santa Barbara physics professor John Martinis is dedicated to finding a workaround that can move forward without eliminating the underlying errors. They are working on a self-correcting qubit.

The latest design developed at Martinis’ lab is quantum circuitry that repeatedly checks itself for errors and suppresses them statistically. It saves data to multiple qubits, giving the overall system the kind of reliability we’ve come to expect from non-quantum digital computers. Since an error-free qubit looked like a daunting hurdle as recently as last week, this result suggests we are amazingly close to a far-reaching breakthrough.

Julian Kelly, a grad student and co-lead author of the research published in the journal Nature, explains:

“One of the biggest challenges in quantum computing is that qubits are inherently faulty so if you store some information in them, they’ll forget it.”

Bit flipping is the problem du jour in smaller, faster computers.

Last week I wrote about a hardware design problem called bit flipping, where a classic, non-quantum computer has this same problem of unreliable data. In an effort to make a smaller DRAM chip, designers created an environment where the field around one bit storage location could be strong enough to change the value of the storage location next to it. You can read about that design flaw, and the hackers who proved it could be exploited to gain system admin privileges on otherwise secure servers, here.

Bit flipping also applies to quantum computing. Quantum computers don’t just save information in binary (“yes/no” or “true/false”) positions. Qubits can be in any or even all positions at once, because they store values in multiple dimensions. It’s called “superposition,” and it’s the very reason quantum computers have the kind of computational prowess they do, but ironically this characteristic also makes qubits prone to bit flipping. Just being around atoms and energy transfer is enough to make the environment unstable, and thus unreliable for data storage.

“It’s hard to process information if it disappears.” ~ Julian Kelly.

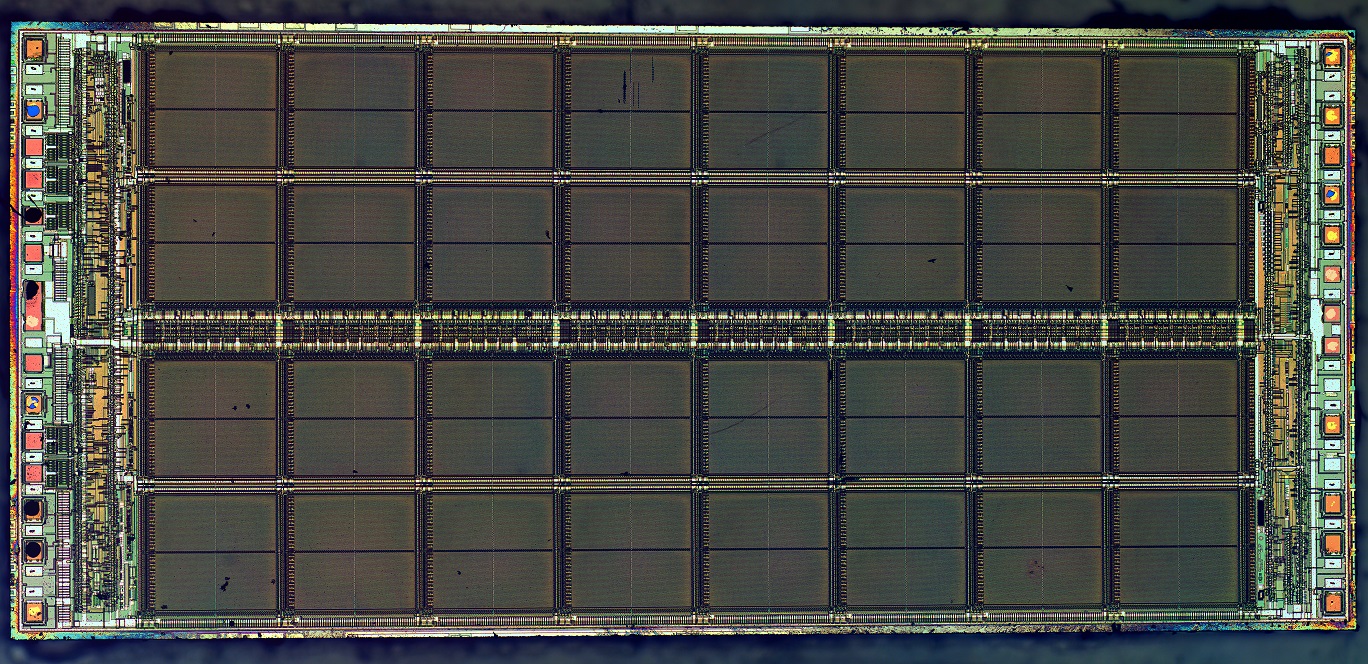

Along with Rami Barends, staff scientist Austin Fowler and others in the Martinis Group, Julian Kelly is building a data storage scheme in which several qubits work in conjunction to redundantly preserve information. Information is stored across several qubits on a chip that is hard-wired to also check for the odd-man-out error. So, while each qubit is unreliable, the chip itself can be trusted to store data for longer and with fewer, hopefully no, errors.
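To make the odd-man-out idea concrete, here is a minimal sketch in Python of a purely classical stand-in: one bit is copied three times, each copy may flip at random, and a majority vote overrules the odd one out. This is an analogy only, not the circuit the Martinis Group built, and the function names (`encode`, `add_noise`, `decode`, `logical_error_rate`) are made up for illustration.

```python
import random
from collections import Counter


def encode(bit, copies=3):
    """Store one logical bit redundantly as `copies` identical physical bits."""
    return [bit] * copies


def add_noise(bits, flip_prob, rng):
    """Flip each physical bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]


def decode(bits):
    """Majority vote: the odd one out is overruled by the others.

    Assumes an odd number of bits, so there is never a tie.
    """
    return Counter(bits).most_common(1)[0][0]


def logical_error_rate(flip_prob, copies=3, trials=20_000, seed=0):
    """Estimate how often the decoded bit is wrong after noise."""
    rng = random.Random(seed)
    wrong = 0
    for _ in range(trials):
        noisy = add_noise(encode(0, copies), flip_prob, rng)
        if decode(noisy) != 0:
            wrong += 1
    return wrong / trials


if __name__ == "__main__":
    p = 0.05  # assumed per-bit flip probability, chosen only for illustration
    print("single bit error rate:", p)
    print("three-copy error rate:", logical_error_rate(p))
```

With three copies, the vote only fails when two or more copies flip at once, so as long as each individual flip is unlikely, the group is more dependable than any single member. That is the sense in which an unreliable part can add up to a trustworthy whole.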

It isn’t a new idea, but this is the first time it’s been applied. The device they designed is small in terms of data storage, but it works as designed. It corrects its own errors. The vision we all have of a working quantum computer able to process a sick amount of data in an impressively short time? That will require something in the neighborhood of a hundred million qubits, each of them redundantly self-checking to prevent errors.

Austin Fowler spoke to Phys.org about the firmware embedded in this new quantum error detection system, calling it the surface code. It relies on measuring the change between a duplicate and the original bit, as opposed to simply comparing copies of the same info. This measurement of change, instead of comparison of duplicates, is called parity recognition, and it is unique to quantum data storage. The original info being preserved in the qubits is never actually observed, which is a key aspect of quantum data.
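The parity idea can be sketched classically too. In the hypothetical Python example below, three bits hold one value, and the only thing ever looked at is whether neighboring bits agree. Those two agree/disagree answers are enough to find a single flipped bit, yet they come out the same whether the stored value is 0 or 1. The names `parity_checks`, `locate_single_flip` and `correct` are illustrative assumptions, and the sketch does not model how the real chip measures parity quantum mechanically.

```python
def parity_checks(bits):
    """Compare each neighboring pair; 1 means the pair disagrees."""
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]


def locate_single_flip(syndrome):
    """For three bits and at most one flip, return the flipped index or None."""
    table = {
        (0, 0): None,  # all neighbors agree: nothing to fix
        (1, 0): 0,     # only the first pair disagrees: bit 0 is the odd one out
        (1, 1): 1,     # both pairs disagree: the middle bit flipped
        (0, 1): 2,     # only the second pair disagrees: bit 2 flipped
    }
    return table[tuple(syndrome)]


def correct(bits):
    """Repair a single flip using only the parity checks."""
    flipped = locate_single_flip(parity_checks(bits))
    fixed = list(bits)
    if flipped is not None:
        fixed[flipped] ^= 1
    return fixed


if __name__ == "__main__":
    # The same checks come out for a stored 0 and a stored 1 with the same flip.
    print(parity_checks([1, 0, 0]), parity_checks([0, 1, 1]))
    print(correct([1, 0, 0]), correct([0, 1, 1]))
```

Because the checks report only agreement or disagreement, the error can be located and undone without anyone learning what the stored value is.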

“You can’t measure a quantum state, and expect it to still be quantum,” explained Barends.

As in any discussion of quantum physics, the act of observation has the power to change the value of the bit. To truly duplicate the data the way classical computing does in error detection, the bit would have to be examined, which in and of itself could cause a bit flip, corrupting the original bit. The device developed at Martinis’ UC Santa Barbara lab avoids this by measuring parity rather than examining the stored bit itself.

This project is a groundbreaking way of combining physical and theoretical quantum computing, because it uses a physical qubit chip together with a logic circuit that applies quantum theory as an algorithm. That the result is a viable way of storing data shows that several previously untested ideas in quantum theory are real and not just logically sound. Ideas that have been pondered for decades are now proven to work in the real world!

What happens next?

Phase flips:

Martinis’ lab will continue its tests in an effort to refine and develop this approach. While bit-flip errors seem to have been solved with this new design, there is another type of error, not found in classical computing, that has yet to be solved: the phase flip. Phase flips might be a whole other article, and until quantum physicists solve them there is no rush for the layman to understand.

Stress tests:

The team is also running the error correction cycle for longer and longer periods while monitoring the device’s integrity and behavior to see what will happen. Suffice it to say, there are a few more types of errors than it may appear, despite this breakthrough.

Corporate sponsorship:

As if there were any doubt about funding... Google has approached Martinis’ lab and offered support in an effort to speed up the day when quantum computers stomp into the mainstream.

Jonathan Howard

Jonathan is a freelance writer living in Brooklyn, NY