Can an image, sound, video or string of words influence the human mind so strongly that the mind is actually harmed or controlled? Cosmoso takes a look at technology and the theoretical future of psychological warfare in Part Three of an ongoing series.

A lot of the responses I got to the first two installments argued that religions are weaponized memes. People do fight and kill on behalf of their religions, and memes play a large part in spreading the messages and information religions have to offer.

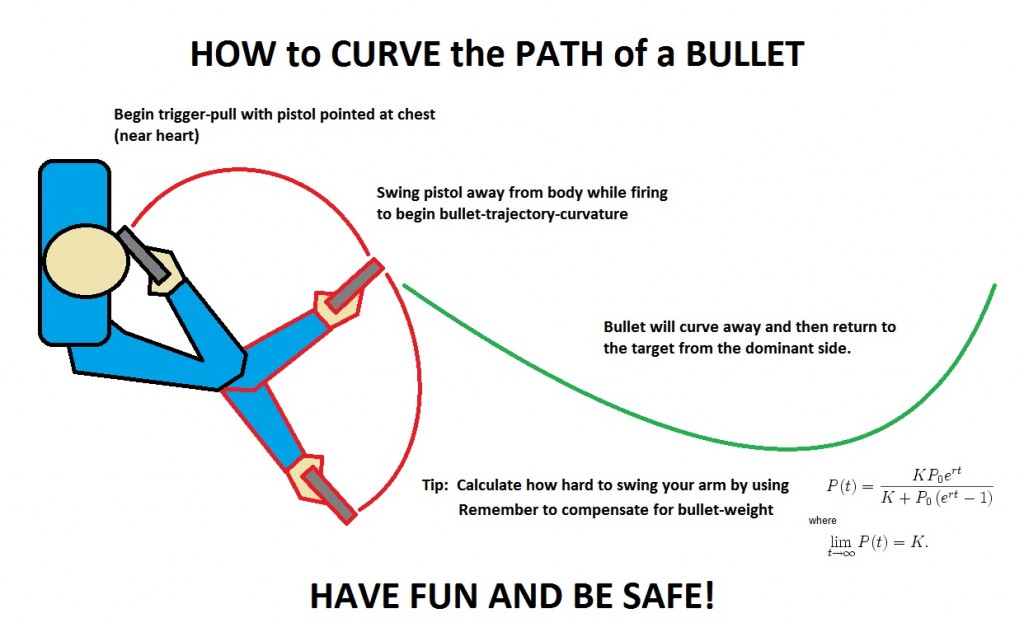

The curved bullet meme is a great one. Most of the comments I see associated with this image are about how dumb someone would have to be to believe it would work. Some people have an intuitive understanding of spatial relations. Others have enough education in physics or basic gun safety that alarm bells would go off long before they'd try something this dumb. It's a pretty dangerous idea to put out there, though, because some percentage of the people the image reaches could try something stupid. Is it a viable memetic weapon? Possibly! I present to you the curved bullet meme.

The dangers here should be obvious. The move starts with “begin trigger-pull with pistol pointed at chest (near heart)”, and anyone who takes it seriously beyond that first step is Darwin Award material.

Whoever created this image presumably never intended anyone to actually try it. For someone to fall for such an obvious trick, they'd have to be pretty dumb. There is another way people fall for tricks, though.

There is more than one way to end up the victim of a mindfuck. Ignorance is part of a lot of them, but ignorance can actually be induced. In the case of religion, several giant pieces of information or ways of thinking must be gotten completely wrong before someone will believe that the earth is coming to an end in 2012, or that the creator of the universe wants you to burn in hell for eternity for not following the rules. By trash-talking religion in general, I've made a percentage of readers angry right now, and that's the point. Even if you take all the other criticisms of religion out of the mix, we can all agree that religion puts its believers in a position to become upset or outraged by very simple graphics or text. To me, as a non-believer, a lot of the things religious people say sound as silly as the curved bullet graphic seems to a well-trained marksman.

To oversimplify it further: religions are elaborate bad advice. You can inoculate yourself against that kind of meme, but the vast majority of people out there cling desperately and violently to some kind of doctrine that claims to answer one or more of life's most unanswerable questions. When people feel relief wash over them, they are more easily duped into doing whatever it takes to keep their access to that feeling.

There are tons of non-religious little memes out there that simply mess with anyone who follows bad advice. Some are just pranks, but the pranks can get pretty destructive. Check out this image from the movie Fight Club:

Think no one fell for this one? For one thing, it's from a movie, and in the movie it was supposed to be a mean-spirited prank that maybe some people fell for. Go ahead and google “fertilize used motor oil”, though, and see how many people are out there asking questions about it. It may blow your mind…

Jonathan Howard

Jonathan is a freelance writer living in Brooklyn, NY.

A Rubik's cube is particularly compelling as a multi-dimensional teaching tool, because it already treats spatial dimensions abstractly and then lets you change the orientation of a third of the cube's mass at a time. It's hard enough to wrap your head around a normal three-dimensional Rubik's puzzle. By adding another dimension and applying the same principle, one can ALMOST imagine a fourth spatial dimension. Most people can't solve a three-dimensional Rubik's puzzle, but if you think you are ready for the fourth dimension, you can download it and play it on your two-dimensional screen, here: