A recent study suggested a causal association between smoking tobacco and developing psychosis or schizophrenia, building on research about the relationship between the use of substances and the risk of psychosis. While cannabis is one of the usual suspects, a potential link with tobacco will have come as a surprise to many.

The report was based on a review of 61 observational studies. It began with the hypothesis that if tobacco smoking played a part in increasing psychosis risk, rather than being used to deal with symptoms that were already present, people would have higher rates of smoking at the start of their illness. It also posited that smokers would have a higher risk of developing psychosis, and an earlier onset of symptoms, than non-smokers. The authors found that more than half of people with a first episode of schizophrenia were already smokers, a rate three times higher than that of a control group.

However, one of the limitations of the study, as the authors admit, is that many of the studies in their review did not control for the consumption of substances other than tobacco, such as cannabis. As many people combine tobacco with cannabis when they smoke a joint, the extent to which tobacco is the risk factor is still unclear.

One clear message the research highlighted was the high level of smoking among people with mental health problems. It also suggested that smoking is not necessarily just something that alleviates symptoms, as the so-called “self-medication hypothesis” proposes.

Almost half of all cigarettes

The figure is stark: 42% of all cigarettes smoked in England are consumed by people with mental health problems. While the life expectancy of the general population continues to climb, people with a severe mental health problem have their lives cut short by up to 30 years, in part because of smoking.

Since the 1950s, rates of smoking have dropped dramatically in the general population, while the number of people with psychosis has remained constant. So why has the incidence of psychosis not mirrored the fall in the overall number of smokers? Two factors might explain this, both of which have kept smoking high among people with mental health problems. First, institutional neglect has held back the efforts and resources devoted to reducing smoking in this group. Until recently, public health campaigns ignored people with mental health problems, with justifications such as “surely they have enough to worry about without nagging about smoking” or “it’s one of the few pleasures they have”.

Second, and more sinister, is the role played by the tobacco industry, which has been neither passive nor unaware of one of its most loyal consumer groups: people with mental health problems. The industry has funded research that supports the self-medication hypothesis, pushing the idea that people with psychosis need tobacco to relieve their symptoms, rather than that tobacco has any link to those symptoms. It has also been a key player in obstructing hospital smoking bans, which it perceives as a threat to tobacco consumption. Worse still, it has marketed cigarettes specifically to people with mental health problems.

Combining substances


People with psychosis use substances for the same reasons you and I do: to relax or feel less stressed. And the good news is that, contrary to many people’s preconceptions, individuals with mental health problems are no different to anyone else in their desire and ability to quit smoking.

This is welcome given the clear links between smoking and physical health. But there are particular issues when it comes to smoking and psychosis. For example, smoking affects the medical treatment of psychosis: tobacco is known to interact with clozapine, one of the drugs used to treat the condition. Smoking interferes with the therapeutic action of clozapine and some other antipsychotics, so people who smoke can require higher doses of the drug.

Then there’s the cannabis question. People with mental health problems are more likely to use drugs such as cannabis, which is usually combined with tobacco when smoked in a joint. So taking up cannabis, and continuing to use it, contributes to higher rates of tobacco dependence among people with mental health problems.

The relationship between cannabis and psychosis has preoccupied researchers, policy makers and clinicians for decades. Unfortunately, most of the evidence that has influenced and underpinned public health messages about cannabis is either outdated or methodologically flawed.

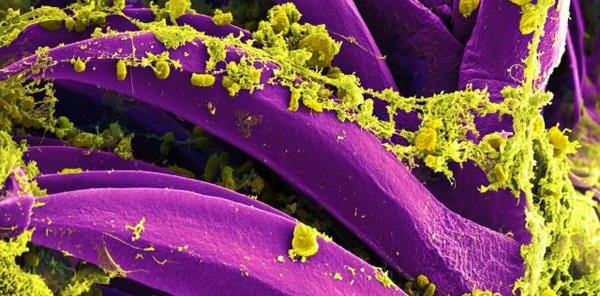

Since many of the seminal studies on this issue were carried out, there has been a marked change in the type of cannabis that is available. Those studies recruited and investigated users who were exposed to lower-potency varieties of cannabis. Over the past decade, higher-potency forms such as “skunk” have come to dominate the streets. The problem is compounded by research that simply asks whether participants currently use, or have ever used, cannabis. This assumes cannabis is a single type of drug, rather than a range of substances with varying strengths and constituent ingredients. To make matters worse, we rely on proxy measures of cannabis potency drawn from police seizures, which may not be representative of the cannabis currently available.

Many people are exposed to a combination of drugs, whether prescribed, recreational or a mix of both. This raises the potential for interactions, where one drug alters the effects of another, and adds a further possibility to the smoking, cannabis and psychosis story: could some people’s psychosis be attributed to an interaction between cannabis and tobacco? Information about drug interactions is scarce, and pharmaceutical research has routinely excluded people who use substances from drug trials. This does not reflect reality, as many people combine medication with recreational drug use.

All of these factors serve as a useful reminder of how little we know about the causes of psychosis and the role drugs play, and of the many vested interests that shape the route we take in trying to understand how to prevent mental health problems or treat the people affected by them.


Ian Hamilton is Lecturer in Mental Health at University of York.

This article was originally published on The Conversation.
