Jon Major, University of Liverpool

Scientists have just discovered massive amounts of a rare metal called tellurium, a key element in cutting-edge solar technology. As a solar expert who specialises in exactly this, I should be delighted. But here’s the catch: the deposit is found at the bottom of the sea, in an undisturbed part of the ocean.

People often have an idealised view of solar as the perfect clean energy source: direct conversion of sunlight to electricity, no emissions, no oil spills or contamination. This, however, overlooks the messy reality of how solar panels are produced.

While the energy produced is indeed clean, some of the materials required to generate that power are toxic or rare. In the case of one particular technology, cadmium telluride-based solar cells, the cadmium is toxic and the tellurium is hard to find.

Cadmium telluride is one of the second-generation “thin-film” solar cell technologies. It’s far better at absorbing light than silicon, on which most solar power is currently based, and as a result its absorbing layer doesn’t need to be as thick. A layer of cadmium telluride just one thousandth of a millimetre thick will absorb around 90% of the light that hits it. Compared with silicon, it’s cheap and quick to set up, and it uses less material.

As a result, it’s the first thin-film technology to effectively make the leap from the research laboratory to mass production. Cadmium telluride solar modules now account for around 5% of global installations and, depending on how you do the sums, can produce lower-cost power than silicon solar.


But cadmium telluride’s Achilles heel is the tellurium itself, one of the rarest metals in the Earth’s crust. Serious questions must be asked about whether technology based on such a rare metal is worth pursuing on a massive scale.

There has always been a divide in opinion about this. The abundance data for tellurium suggest a real issue, but the counter-argument is that no-one has been actively looking for new reserves of the material. After all, platinum and gold are similarly rare, but demand for jewellery and catalytic converters (the primary use of platinum) means that in practice we are able to find plenty.

The discovery of a massive new tellurium deposit in an underwater mountain in the Atlantic Ocean certainly supports the “it will turn up eventually” theory. And this is a particularly rich ore, according to the British scientists involved in the MarineE-Tech project which found it. Most tellurium is extracted as a by-product of copper mining and so comes in relatively low yields, but their seabed samples contain concentrations 50,000 times higher than on land.

Google Earth

Extracting any of this will be formidably hard and very risky for the environment. The top of the mountain where the tellurium has been discovered is still a kilometre below the waves, and the nearest land is hundreds of miles away.

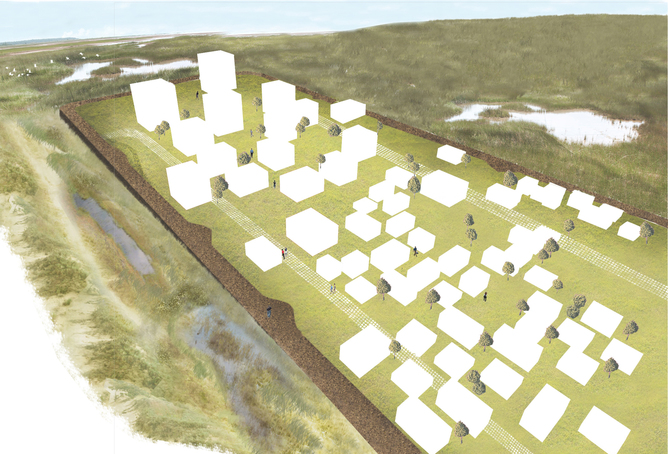

Even on dry land, mining is never a good thing for the environment. It can uproot communities, decimate forests and leave huge scars on the landscape. It often leads to groundwater contamination, despite whatever safeguards are put in place.

And on the seabed? Given the technical challenges and the pristine ecosystems involved, I think most people can intuitively guess at the type of devastation that deep-sea mining could cause. No wonder it has yet to be implemented anywhere, despite plans off the coast of Papua New Guinea and elsewhere. Indeed, there’s no suggestion that tellurium mining is likely to occur at this latest site any time soon.

Is deep-sea mining worth the risk?

However, the mere presence of such resources, along with wind turbines and electric car batteries that rely on scarce materials or risky industrial processes, raises an interesting question. These are useful low-carbon technologies, but are they also required to be environmentally ethical?

There is often the perception that everyone working in renewable energy is a lovely tree-hugging, sandal-wearing leftie, but this isn’t the case. After all, this is now a huge industry, one that is aiming to eventually supplant fossil fuels, and there are valid concerns over whether such expansion will be accompanied by a softening of regulations.

We know that solar power is ultimately a good thing, but do the ends always justify the means? Or, to put it more starkly: could we tolerate mass production of solar panels if it necessitated mining and drilling on a similar scale to the fossil fuel industry, along with the associated pitfalls?


To my mind, the answer is undoubtedly yes; we have little choice. After all, mass solar would still wipe out our carbon emissions, helping curb global warming and the associated apocalypse.

What’s reassuring is that, even as solar becomes a truly mature industry, it has started from a more noble and environmentally sound place. Cadmium telluride modules, for example, include a cost to cover recycling, while scarce resources such as tellurium can be recovered from panels at the end of their lifespan of 20 years or more (compare this with fossil fuels, where the materials that produce the power are irreparably lost in a bright flame and a cloud of carbon).

The impact of mining for solar panels will likely be minimal in comparison to the oil or coal industries, but it will not be zero. As renewable technology becomes more crucial, we perhaps need to start calibrating our expectations to account for this.

At some point, mining operations in search of solar or wind materials will cause damage, or some industrial production process will go awry and cause contamination. This may be the Faustian pact we have to accept, as the established alternatives are far worse. Unfortunately, nothing is perfect.

Jon Major, Research Fellow, Stephenson Institute for Renewable Energy, University of Liverpool