The overwhelming scientific consensus is that gases produced by human activity are affecting the global climate. But even if you don’t believe the current warming of the global climate is caused by humans, it’s only common sense that cutting back on human production of heat-trapping gases may help reverse the disturbing recent upward trend in global temperatures.

While politicians attempt to change reality by voting on facts, scientists like me will move forward as best we can to find solutions to the overwhelming problem of climate change.

Agriculture is an often overlooked source of human-produced greenhouse gases. Currently responsible for 10%-12% of global anthropogenic greenhouse gas emissions, it’s a realm ripe for an emissions reduction overhaul. But with the rising global human population, how can farmers increase food production to meet demands while simultaneously cutting back on greenhouse gas emissions? Current research targets ways to mitigate greenhouse gas production while maintaining agricultural productivity, via strategies such as changing how plants are left on fields in the fall, tweaking how much and when fertilizers are added to fields, and adjusting what we feed livestock.

The insulators at issue

Greenhouse gases absorb infrared radiation – that is, heat. Once in the Earth’s atmosphere, they act as an insulating blanket, trapping the sun’s warmth. Carbon dioxide, the most famous greenhouse gas, is what you exhale with every breath. The main human activity that produces CO2 is the burning of fossil fuels.

Methane and nitrous oxide are also greenhouse gases, and both are produced on farms. Methane comes mainly from rice paddies, manure stockpiles and ruminant animals, such as cattle. Nitrous oxide traces back to nitrogen-containing fertilizers (including manure, commonly the fertilizer of choice for organic farms) added to soils. These gases are of particular concern since, molecule for molecule, they have a greater heat-trapping ability than CO2, meaning that a given increase in nitrous oxide or methane has a greater effect on atmospheric temperature than the same increase in CO2.
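To make that comparison concrete, here is a minimal sketch of how emissions of different gases are commonly put on one scale as "CO2 equivalents." The weighting factors are an assumption not given in this article: they are the 100-year global warming potentials from the IPCC's Fifth Assessment Report (28 for methane, 265 for nitrous oxide). The farm's emission figures and the function name are purely hypothetical, chosen only to show the arithmetic.

```python
# 100-year global warming potentials relative to CO2.
# Assumption: IPCC Fifth Assessment Report values (without climate-carbon
# feedbacks); these numbers do not come from the article itself.
GWP_100 = {"co2": 1, "ch4": 28, "n2o": 265}


def co2_equivalent(emissions_kg):
    """Return total emissions in kg of CO2-equivalent.

    emissions_kg maps a gas name ("co2", "ch4", "n2o") to kg emitted.
    """
    return sum(GWP_100[gas] * kg for gas, kg in emissions_kg.items())


# Hypothetical annual emissions for an illustrative farm, in kg of each gas.
farm = {"co2": 1000, "ch4": 100, "n2o": 10}

print(co2_equivalent(farm))  # prints 6450
```

In this made-up example, 10 kg of nitrous oxide counts for more than twice as much as the full 1,000 kg of CO2, which is why comparatively small amounts of farm-produced gases matter.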

Water vapor in the air can also trap heat and so act as a greenhouse gas. Water vapor levels depend on atmospheric temperature, which is in turn affected by levels of heat-trapping gases in the air. By reducing levels of other greenhouse gases in the air, we’ll also reduce the amount of heat-trapping water vapor produced via evaporation of surface water. This has implications for farms that use irrigation.

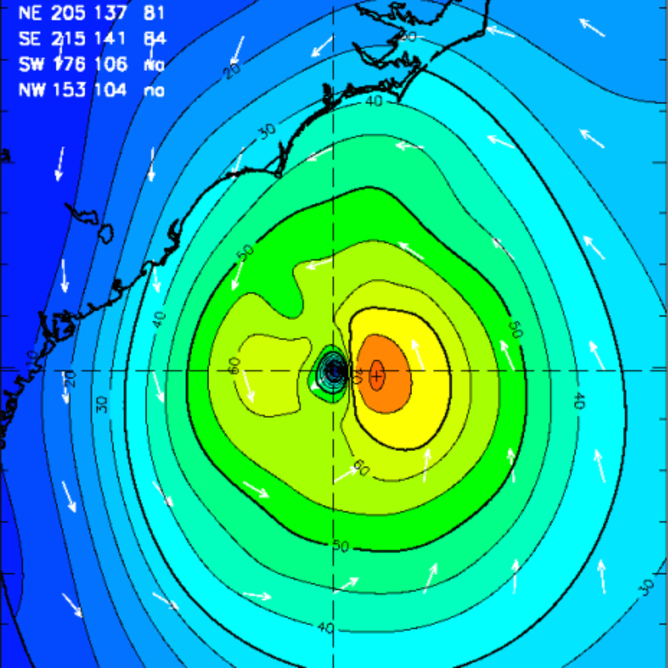

KoiQuestion, CC BY-SA

The natural nitrogen cycle on the farm

All plants need nitrogen, but the form that makes up the majority of the air isn’t readily accessible to them. They depend on the Earth’s nitrogen cycle to convert it to a form they can use.

Providing nitrogen for their crops is one of the main reasons farmers apply fertilizer, whether organic or synthetic. Natural biological processes then act on the nitrogen in fertilizer (in the form of nitrate or ammonium): ammonium is first converted to nitrate, and a process called denitrification converts nitrate to harmless nitrogen gas (N2, a major component in Earth’s atmosphere).

One of the steps in the process of denitrification is the production of nitrous oxide. To complete the denitrification process, bacteria that occur naturally in the soil convert nitrous oxide to N2. However, not every bacterium in the environment that produces nitrous oxide can also reduce it to N2 gas.
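The sequence of steps can be written out as a chain. This is the standard textbook pathway for denitrification, starting from nitrate, rather than something spelled out in this article:

```latex
\mathrm{NO_3^-} \rightarrow \mathrm{NO_2^-} \rightarrow \mathrm{NO} \rightarrow \mathrm{N_2O} \rightarrow \mathrm{N_2}
```

Nitrous oxide (N2O) is the second-to-last link, so any bacterium that stops one step short releases it instead of N2.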

The sum of the activity of all denitrifying bacteria in the soil, along with soil chemistry and climatic factors, will affect the relative rates of nitrous oxide consumption or production by a patch of soil. When the amount of fertilizer added to a soil is more than can be taken up and used by plants, bursts of nitrous oxide are often produced. In climates where the ground freezes in winter, spring thaw is often accompanied by bursts of nitrous oxide production as well. These nitrous oxide bursts are an issue for the warming planet.

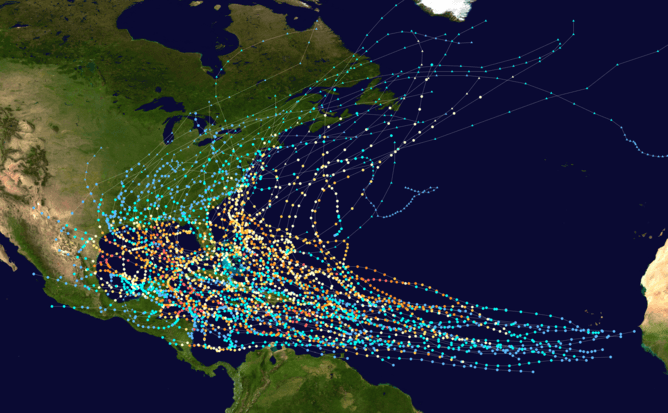

Tormod Sandtorv, CC BY-SA

Methane manufacture

In nature, microorganisms known as methanogens convert simple forms of carbon to methane (CH4). Methane production, or methanogenesis, happens in the absence of oxygen. So waterlogged soils (such as bogs or rice paddies) tend to produce more methane, as does the rumen (the first stomach chamber) of ruminant animals, such as cows and sheep, where methanogens also live.

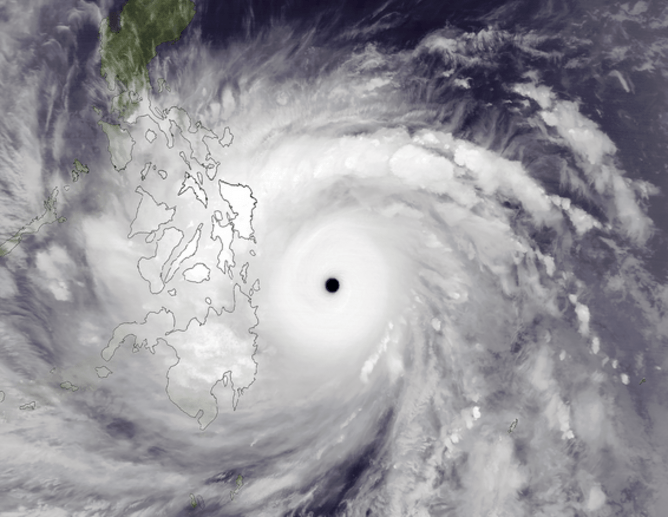

Robyn Hall, CC BY-NC

How to reduce greenhouse gases from farms

There are a number of ways to reduce greenhouse gas emissions from farms, but none is completely simple – the microbial ecosystems in soils and livestock rumens are complex environments, and the tools to study them thoroughly have only really been around for a few decades. But research is under way to tackle greenhouse gas emissions from agriculture, and it’s already clear there are several paths that can help.

We know that, in climates where the ground freezes, overwintering plants on the soil (that is, leaving plants intact on the soil surface after harvest instead of plowing them in or removing them in the fall) can help reduce nitrous oxide emissions. One theory to explain this effect is that the composition and activity of the bacterial communities in the soil that produce nitrous oxide gas, or transform it into N2, are affected by soil temperature and by the nutrients added to the soil. Leaving the plants on the ground surface insulates the soil and so affects its temperature. And the plants contribute nutrients to the soil as they slowly decay during periods above freezing. It’s a complicated system, to be certain, and research on this subject is ongoing.

Chafer Machinery, CC BY

We also know that limiting inputs of nitrogen to just the amount likely to be usable by plants can reduce emissions. This is easy in principle, of course, but actually guessing how much nitrogen a field full of plants needs to grow well and then supplying just enough to ensure good yields is in practice very difficult. Too little nitrogen and your plants may not grow as well as they should.

Low-input agriculture aims to reduce the amounts of products, including fertilizers, applied to fields. Along with best management practices, it should help reduce both greenhouse gas production and the environmental impact of farming.

Giving livestock different kinds of food can alter methane emissions from ruminant animals. For example, a greater amount of lipid (fat) in the diet can reduce emissions, but adding too much impairs the animals’ ability to digest other nutrients. Adding nitrate can also reduce methane emissions, but too much nitrate is toxic. Researchers are currently working on modifying the composition of microorganism populations in the rumen to reduce methanogenesis – and thus emissions.

Additionally, it may be possible to add bacteria to soils or to manure digesters to reduce production of nitrous oxide, since certain denitrifying bacteria added to manured soil were able to cut nitrous oxide emissions in a recent study. The bacteria used in this study were naturally occurring organisms isolated from Japanese soils, and similar bacteria are found in soils all around the world.

One of the goals of the lab I work in is to find out how different agricultural management strategies affect the naturally occurring bacteria in the soil, and how these changes relate to levels of nitrous oxide that the soil produces. It may be that, in the future, farmers will use mixtures of bacteria to both promote plant growth and health and mitigate nitrous oxide emissions from the soils on their farms.

Let’s stop waiting

The world is a complicated place – the many different processes that make up global carbon and nitrogen cycles interact in complex ways that we’re still studying. In addition to other solutions engineers have devised that should help reduce emissions, we need to continue working on the agricultural piece of this puzzle.

Elizabeth Bent is Research Associate in Microbiology at University of Guelph.

This article was originally published on The Conversation.
