Antarctica is already feeling the heat of climate change, with rapid melting and retreat of glaciers over recent decades.

Ice mass loss from Antarctica and Greenland contributes about 20% of the current rate of global sea level rise, and this loss is projected to increase over the coming century.

A recent article on The Conversation raised the concept of “climate tipping points”: thresholds in the climate system that, once breached, lead to substantial and irreversible change.

Such a climate tipping point may occur as a result of the increasingly rapid decline of the Antarctic ice sheets, leading to a rapid rise in sea levels. But what is this threshold? And when will we reach it?

What does the tipping point look like?

The Antarctic ice sheet is a large mass of ice, up to 4 km thick in some places, and is grounded on bedrock. Ice generally flows from the interior of the continent towards the margins, speeding up as it goes.

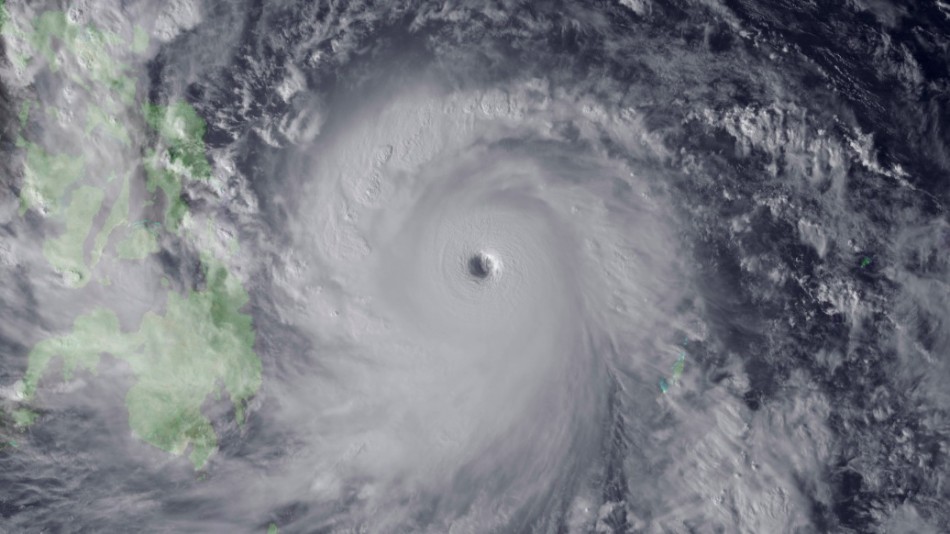

Where the ice sheet meets the ocean, large sections of connected ice – ice shelves – begin to float. These eventually melt from the base or calve off as icebergs. The whole sheet is replenished by accumulating snowfall.

David Gwyther

Floating ice shelves act like a cork in a wine bottle, slowing down the ice sheet as it flows towards the ocean. If ice shelves are removed from the system, the ice sheet will rapidly accelerate towards the ocean, causing further ice mass loss.

A tipping point is reached if too much of an ice shelf is lost. For some glaciers, this may trigger irreversible retreat.

Where is the tipping point?

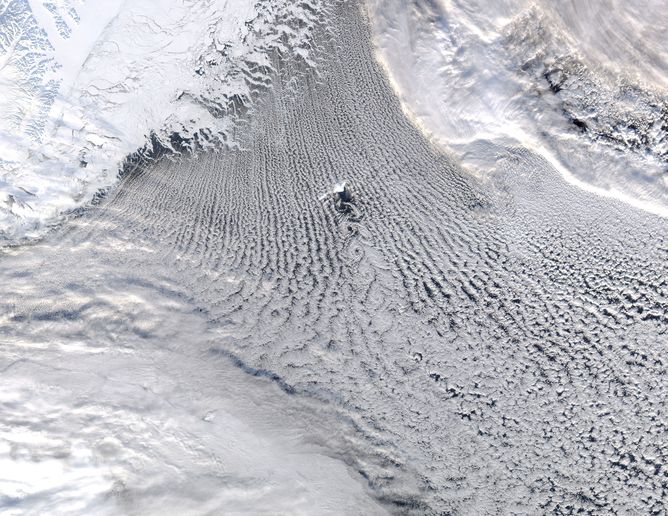

One way to identify a tipping point involves figuring out how much shelf ice Antarctica can lose, and from where, without changing the overall ice flow substantially.

A recent study found that 13.4% of Antarctic shelf ice – distributed regionally across the continent – does not play an active role in ice flow. But if this “safety band” were removed, it would result in significant acceleration of the ice sheet.

Esmee van Wijk/CSIRO

Antarctic ice shelves thinned at an overall rate of about 300 cubic km per year between 2003 and 2012, and are projected to thin even further over the 21st century. This thinning will push Antarctic ice shelves towards a tipping point, beyond which irreversible collapse of the ice shelves and a rise in sea levels may follow.

How do we predict when it will happen?

Some areas of West Antarctica may already be close to the tipping point. For example, ice shelves along the coasts of the Amundsen and Bellingshausen Seas are thinning the most rapidly and have the smallest “safety bands” of all Antarctic ice shelves.

To predict when the “safety band” of ice might be lost, we need to project changes into the future. This requires a better understanding of the processes that remove ice from the ice sheet, such as melting at the base of ice shelves and iceberg calving.

Melting beneath ice shelves is the main source of Antarctic ice loss. It is driven by contact between warmer sea waters and the underside of ice shelves.

Working out how much ice will be lost in the future requires knowing how quickly the oceans are warming, where these warmer waters will flow, and how the atmosphere modulates these interactions. That is a complex task that requires computer modelling.

How quickly ice shelves break up and form icebergs is less well understood, and is currently one of the biggest uncertainties in projections of future Antarctic mass loss. Much of the ice lost to calving goes in the sporadic release of extremely large icebergs, which can be tens or even hundreds of kilometres across.

It is difficult to predict precisely when and how often large icebergs will break off. Models that can reproduce this behaviour are still being developed.

Scientists are actively researching these areas by developing models of ice sheets and oceans, as well as studying the processes that drive mass loss from Antarctica. These investigations need to combine long-term observations with models: model simulations can then be evaluated and improved, making the science stronger.

The interaction between ice sheets, oceans, sea ice and the atmosphere is one of the least understood but most important factors in Antarctica’s tipping point. Understanding it better will help us project how much sea levels will rise, and ultimately how we can adapt.


Felicity Graham, Ice Sheet Modeller, Antarctic Gateway Partnership, University of Tasmania; David Gwyther, Antarctic Coastal Ocean Modeller, University of Tasmania; Lenneke Jong, Cryosphere System Modeller, Antarctic Gateway Partnership & Antarctic Climate and Ecosystems CRC, University of Tasmania, and Sue Cook, Ice Shelf Glaciologist, Antarctic Climate and Ecosystems CRC, University of Tasmania

This article was originally published on The Conversation.