Every human is different. Some are outgoing, while others are reserved and shy. Some are focused and diligent, while others are haphazard and unfussed. Some people are curious, others avoid novelty and enjoy their rut.

This is reflected in our personality, which is typically measured across five factors, known as the “Big Five”. These are:

- Openness – intellectual curiosity and preference for novelty

- Conscientiousness – the degree of organisation and self-discipline

- Extraversion – sociability, emotional expression and tendency to seek others’ company

- Agreeableness – degree of trust in or suspicion of others, together with tendencies towards helpfulness and altruism, and

- Neuroticism – emotional stability or volatility.

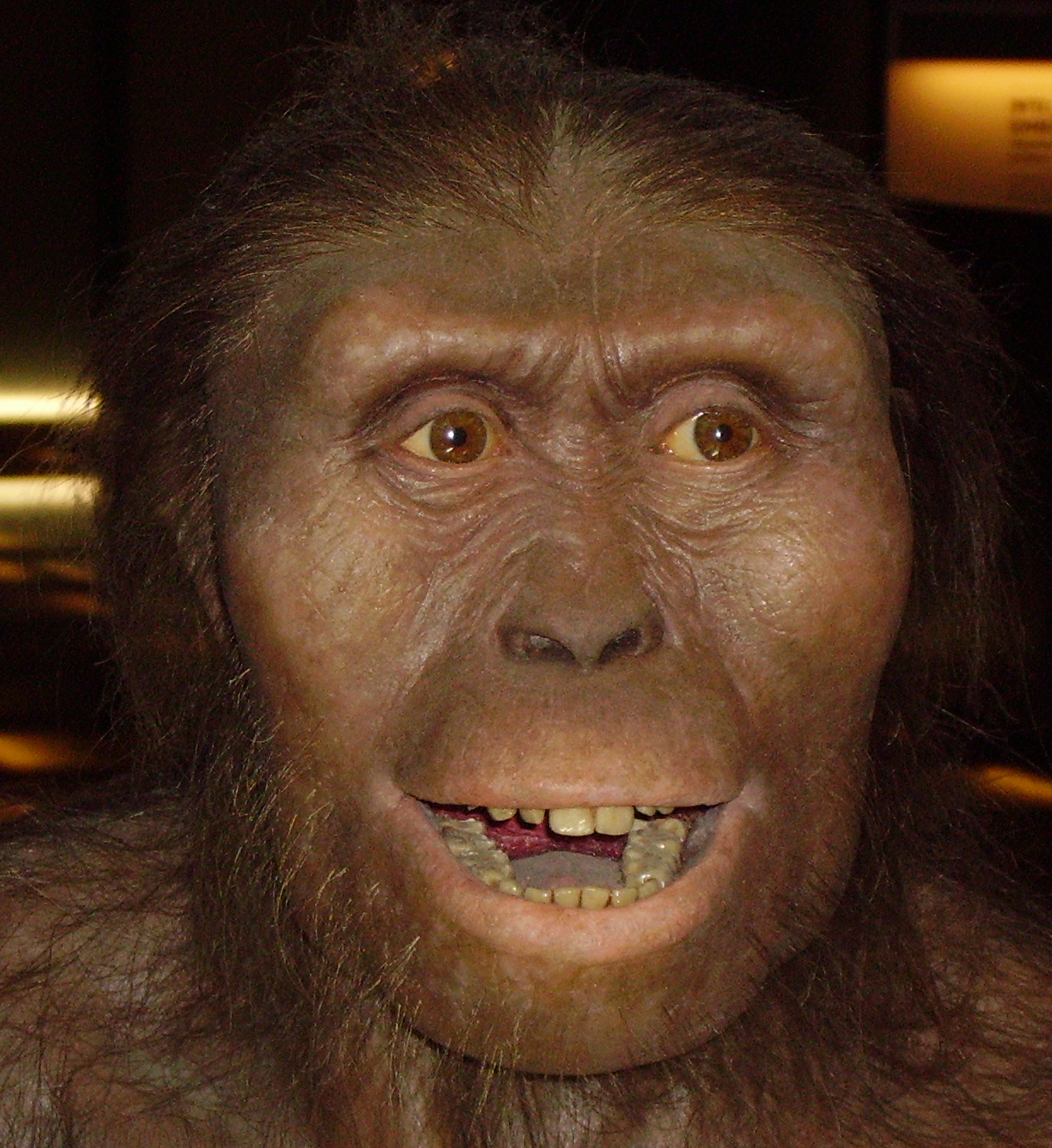

But did you know that our primate cousins – other apes (chimpanzees, bonobos, orangutans, gorillas and gibbons) and monkeys – also exhibit a similar personality profile? Some are bold, others shy. Some are friendly, others aggressive. Some are curious, while others are conservative.

But they also differ from us in some interesting ways. And it’s in teasing out these differences that we can learn a surprising amount about the way they live, and how they have evolved.

Social influence

Comparative psychologists have long adapted personality tests to measure the personality of other species, including pets, big cats, and our “hairy” primate relatives.

Since nonhuman animals cannot fill out a questionnaire, a human who knows them well – perhaps a caregiver, zookeeper, owner, researcher or park ranger – rates their personality for them.

Chimpanzees, it turns out, are remarkably similar to us in their personality make-up. They have been found to have the same five personality factors that we have. However, they also have a sixth factor, Dominance. It covers traits such as being independent, confident, fearless, intelligent, bullying and persistent.

Why do chimps have a Dominance factor and we don’t? It appears to be due to the kind of society that chimps live in. Understanding the dominance hierarchy of male chimpanzees – who is powerful and who is not – is a matter of survival and well-being for every chimpanzee in a community.

Other primates also show interesting variations in personality that correspond to their social dynamics.

Rod Waddington/Flickr, CC BY-SA

Macaque machinations

The 22 species of macaque monkeys are the only primates that are as widespread in their distribution as we are. Along with their disparate habitats, they also show wide variation in the structure of their societies, which appears to have influenced the evolution of their personalities.

A team of researchers, led by Mark Adams and Alexander Weiss of Edinburgh University, investigated personality and social structure in six species of macaque and found some interesting variation.

There are four main categories of social style, ranging from Grade 1 “despotic” to Grade 4 “tolerant”, depending on how strict or relaxed their female dominance hierarchies are.

Grade 1 species showed strong nepotism, or favouritism, towards kin and high-ranking monkeys. These species include rhesus macaques, a species commonly used in laboratories and sent into space before humans, and Japanese macaques, which include the famous snow monkeys who soak in hot springs.

At the other end of the spectrum, the Grade 4 species showed more tolerance in social interactions between unrelated females. These include Tonkean macaques, which are found in Sulawesi and the nearby Togian Islands in Indonesia, and crested macaques, which are critically endangered.

(A wild crested macaque received international attention when he stole a wildlife photographer’s camera and then photographed himself. This could be an example of a “bold” and “curious” personality.)

In the middle of the social tolerance scale are the Grade 2 and 3 species. These include Assamese macaques, which are sometimes found at high altitudes in Nepal and Tibet, and Barbary macaques, which include the infamous “apes” of Gibraltar (actually monkeys, not apes), who are often overweight and aggressive because tourists overfeed them.

Michelle Bender/Flickr, CC BY-NC-ND

Personality differences between macaque species

Interestingly, the individual species of macaques didn’t all have the same personality factors. The Japanese, Barbary, crested and Tonkean macaques had only four, while the Assamese had five, and rhesus monkeys had six factors.

All of the species exhibited the dimension of Friendliness. This seems to be a personality factor unique to macaques, and is a blend of chimpanzee Agreeableness and human Altruism.

Tonkean macaques also had a Sociability personality factor. Just like chimpanzees and humans, this species of macaque uses affiliative contacts (i.e. friendship) to reinforce bonds. Only crested macaques did not show the personality factor of Openness (i.e. curiosity), usually found in humans and other primates. The factors Dominance and Anxiety were found for rhesus and Japanese macaques.

The old and the new

The study also showed fascinating connections between personality and social style. The Grade 1 despotic species – Japanese and rhesus macaques – were rather similar to each other in personality, as were the Grade 2, 3 and 4 species, including the more tolerant Assamese, Tonkean and crested macaques.

On the evolutionary scale, African primates, such as the African Barbary macaque, are “older”. Therefore, they represented the “ancestral” social behaviours for macaques.

Barbary macaque personality has a Dominance/Confidence factor, which is related to social assertiveness, an Opportunism factor, which relates to aggression and impulsivity, a Friendliness factor, relating to social affiliation, and an Openness factor, relating to curiosity and exploratory behaviour.

Rhesus and Japanese macaques, on the other hand, are “younger” on the evolutionary scale. Therefore, the Dominance and Anxiety factors seen in these species must have evolved later.

jinterwas/Flickr, CC BY

Understanding the personality of an individual animal or species can help in animal management and welfare. Rhesus macaques, for example, display an Anxiety personality factor. These monkeys are also the ones most commonly used in biomedical laboratory research. Knowing that some individuals may be prone to anxiety means that researchers must make extra efforts to alleviate any potential distress.

The finding that some Barbary macaques may be especially socially assertive, aggressive, impulsive, curious and exploratory may also help us convince tourists to keep their distance from these monkeys in Gibraltar to avoid conflicts!

Such studies of animal personality also shed light on our own personality dimensions. Our lack of a Dominance factor suggests that our ancestral environment was perhaps more egalitarian and less characterised by high social stratification, which is also borne out by anthropological and palaeontological studies.

Ultimately, we can learn a lot from our primate cousins, not only about their personalities, but about personality itself – not to mention learning a thing or two about ourselves and the social environment in which we evolved.

Carla Litchfield is Lecturer, School of Psychology, Social Work and Social Policy at University of South Australia.

This article was originally published on The Conversation.

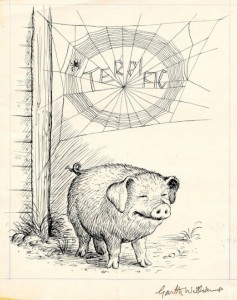

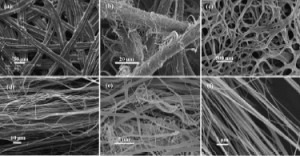
