Without cooling, the supply of food, medicine and data would simply break down. We consume large amounts of energy and cause a great deal of pollution keeping things cool, yet compared with electricity, transport or heat, cold has received very little attention in the energy debate: the UK, the USA and the EU all still lack an explicit policy on cold.

Global demand is booming – and incremental efficiency improvements are unlikely to contain the resulting environmental damage. We need radically new technology.

In rapidly developing nations, investment in cooling is starting to boom as rising incomes, urbanisation and population growth boost demand. But the industry remains rudimentary and has enormous headroom to grow: in India, just 4% of fresh produce is currently transported in refrigerated vehicles, compared with more than 90% in the UK; China has an estimated 66,000 refrigerated vehicles to serve a population of 1.3 billion, whereas France has 140,000 for 66 million. These disparities seem unlikely to persist: India projects that it needs to spend more than US$15 billion on the cold chain over the next five years.
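To put the fleet figures above on a common footing, here is a minimal sketch that converts them into refrigerated vehicles per million people. It uses only the numbers quoted in this article; the variable and function names are illustrative assumptions, and the result is a rough comparison rather than an official statistic.

```python
# Figures as quoted in the article; all names here are illustrative only.
fleets = {
    "China": {"vehicles": 66_000, "population": 1_300_000_000},
    "France": {"vehicles": 140_000, "population": 66_000_000},
}


def vehicles_per_million(vehicles, population):
    """Return the number of refrigerated vehicles per million people."""
    return vehicles / (population / 1_000_000)


# Per-capita fleet density for each country.
density = {
    country: vehicles_per_million(**figures)
    for country, figures in fleets.items()
}

for country, value in density.items():
    print(f"{country}: about {value:.0f} refrigerated vehicles per million people")

# How many times denser France's fleet is than China's, per head.
ratio = density["France"] / density["China"]
print(f"France has roughly {ratio:.0f} times as many per head as China")
```

On these figures, China has about 51 refrigerated vehicles per million people and France about 2,121, a gap of roughly 42 to one.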

For industrial use, cold must generally be maintained through a whole supply chain – think of how seafood can remain frozen from trawler to supermarket. We call this the cold chain.

Brian Smith, CC BY

Cold chain growth is currently based on diesel-powered technologies that produce grossly disproportionate emissions of nitrogen oxides (NOx) and particulate matter (PM). The fridge you might find on a supermarket home delivery van consumes up to 20% of the vehicle’s diesel, but emits up to six times as much NOx and 29 times as much PM as the engine. It also uses HFC refrigerants harmful to the atmosphere.

At the same time, however, vast amounts of cold are wasted, for example when liquefied natural gas (LNG) is turned back into gas at import terminals. This cold could potentially be stored as liquid air or liquid nitrogen, then recycled to reduce the cost and environmental impact of cooling in buildings and vehicles.

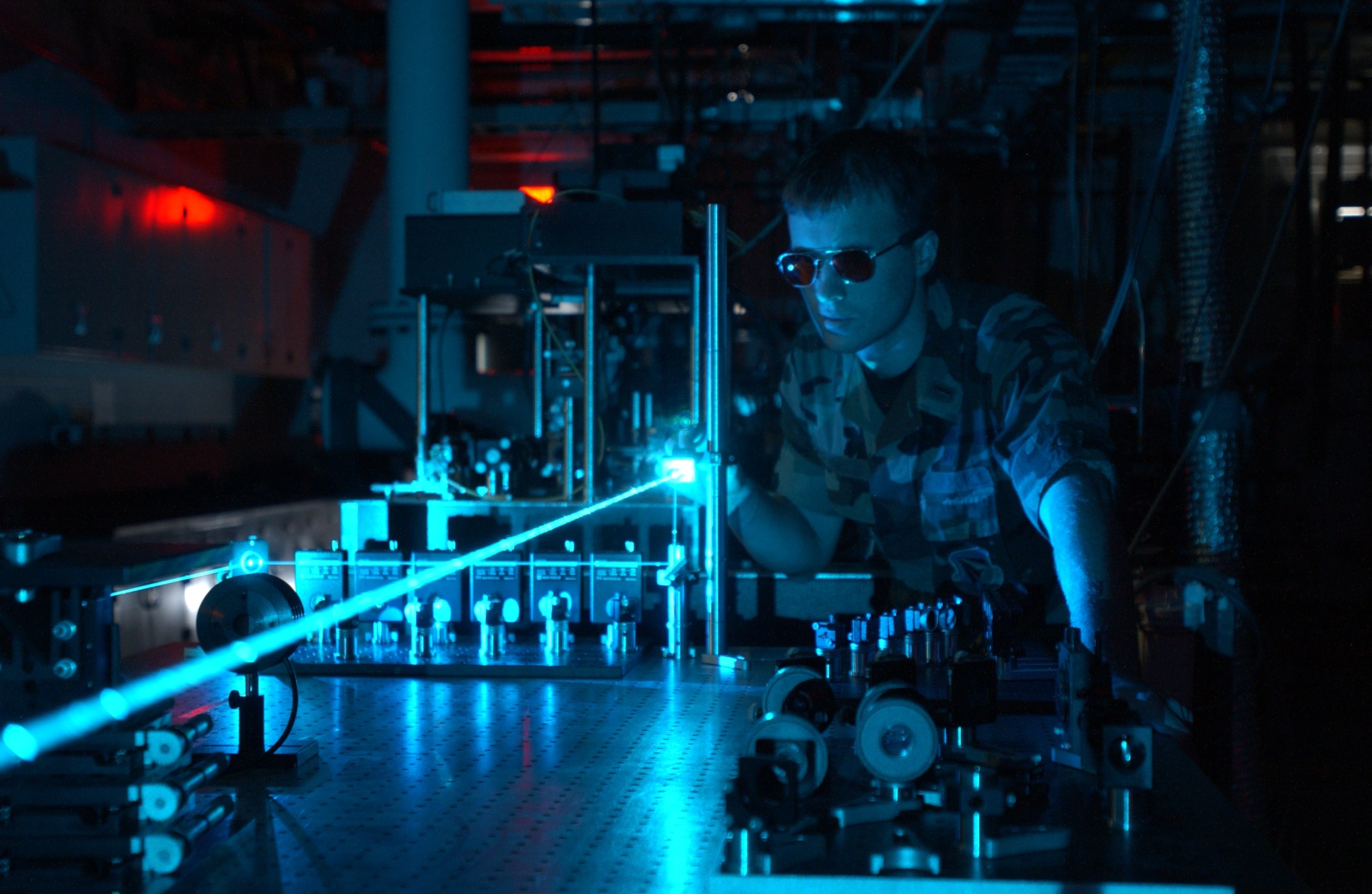

This insight has stimulated new thinking aimed at creating business and environmental value from the efficient integration of cold into the wider energy system, an approach now known as the “cold economy”. Crucially, the cold economy involves recycling waste cold and “wrong-time” energy, such as excess wind power generated at night when demand is low, and using novel forms of energy storage to turn them into low-carbon, zero-emission cooling and power.

Big changes in the energy market over the next decade will spur the adoption of tidal power, solar power, offshore wind and other novel technologies. This in turn will require far greater integration of different forms of energy generation and consumption – and it is increasingly clear this now means joining up not only heat, power and transport, but also cold.

This is an important opportunity: with the right support, the cold economy could develop into a large industry that simultaneously reduces greenhouse gas emissions, improves air quality and replaces environmentally destructive refrigerants with benign alternatives – as well as generating thousands of new manufacturing jobs.

The cold economy is the subject of a new policy commission entitled Doing Cold Smarter, launched by the University of Birmingham this month. It will assess not only how the growing demand for cooling can be met without causing environmental ruin, but also the potential benefits both in the UK and emerging markets.

What will we come up with? You’ll have to wait until the commission’s final report is published this autumn. But it should be full of thought-provoking – even cool – ideas.


This article was originally published on The Conversation.
