Materials can be divided into two categories based on their ability to conduct electricity. Metals, such as copper and silver, allow electrons to move freely and carry electrical charge with them. Insulators, such as rubber or wood, hold on to their electrons tightly and will not allow an electrical current to flow.

In the early 20th century, physicists developed new laboratory techniques to cool materials to temperatures near absolute zero (-273 °C), and began investigating how the ability to conduct electricity changes in such extreme conditions. In some simple elements, such as mercury and lead, they noticed something remarkable – below a certain temperature these materials could conduct electricity with no resistance. In the decades since this discovery, scientists have found the same behaviour in thousands of compounds, from ceramics to carbon nanotubes.

We now think of this state of matter as neither a metal nor an insulator, but an exotic third category, called a superconductor. A superconductor conducts electricity perfectly, meaning an electrical current in a superconducting wire would continue to flow round in circles for billions of years, never degrading or dissipating.

Electrons in the fast lane

On a microscopic level the electrons in a superconductor behave very differently from those in a normal metal. Superconducting electrons pair together, allowing them to travel with ease from one end of a material to another. The effect is a bit like a priority commuter lane on a busy motorway. Solo electrons get stuck in traffic, bumping into other electrons and obstacles as they make their journey. Paired electrons on the other hand are given a priority pass to travel in the fast lane through a material, able to avoid congestion.

Superconductors have already found applications outside the laboratory in technologies such as magnetic resonance imaging (MRI). MRI machines use superconductors to generate a large magnetic field that gives doctors a non-invasive way to image the inside of a patient’s body. Superconducting magnets also made possible the recent detection of the Higgs boson at CERN, by bending and focusing beams of colliding particles.

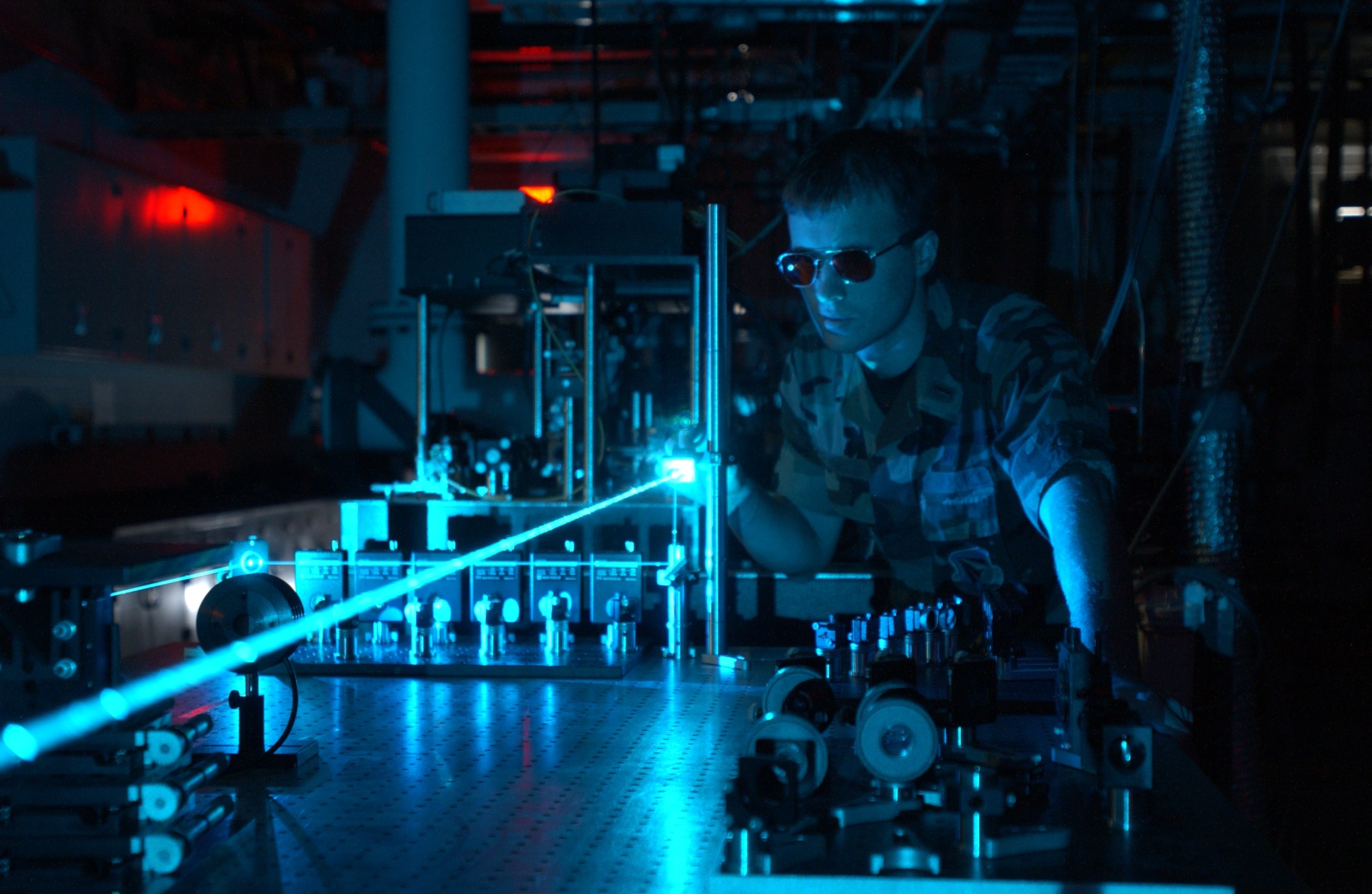

Jan Ainali, CC BY

One interesting and potentially useful property of superconductors arises when they are placed near a strong magnet. The magnetic field causes electrical currents to spontaneously flow on the surface of a superconductor, which then give rise to their own, counteracting, magnetic field. The effect is that the superconductor dramatically levitates above the magnet, suspended in the air by an invisible magnetic force.

What prevents more widespread use of these materials is the fact that the superconductors we know about operate only at very low temperatures. In the simple elements, for instance, superconductivity dies out at just 10 kelvin, or -263 °C. In more complicated compounds, such as yttrium barium copper oxide (YBa₂Cu₃O₇), superconductivity may persist to higher temperatures, up to 100 kelvin (-173 °C). While this is an improvement on the simple elements, it is still much colder than the coldest winter night in Antarctica.
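For readers who want to check the temperature figures quoted above, here is a minimal sketch of the kelvin-to-Celsius conversion. The function name `kelvin_to_celsius` and the labels in the loop are illustrative choices, not anything taken from the research described here; the only physics assumed is that absolute zero sits at -273.15 °C.

```python
ABSOLUTE_ZERO_C = -273.15  # 0 kelvin expressed in degrees Celsius


def kelvin_to_celsius(kelvin: float) -> float:
    """Convert a temperature from kelvin to degrees Celsius."""
    if kelvin < 0:
        raise ValueError("temperatures below absolute zero are not physical")
    return kelvin + ABSOLUTE_ZERO_C


# The two limits quoted in the text: simple elements and YBa2Cu3O7.
for label, kelvin in [("simple elements", 10), ("YBa2Cu3O7", 100)]:
    celsius = kelvin_to_celsius(kelvin)
    print(f"{label}: {kelvin} K is about {celsius:.0f} °C")
```

The article rounds absolute zero to -273 °C, which is why its Celsius figures are whole numbers.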

Scientists dream of finding a material whose superconducting properties can be used at room temperature, but it’s a challenging task. Turning up the temperature tends to destroy the glue that binds the electrons into superconducting pairs, which returns the material to its boring metallic state. One of the great challenges in the field arises from the fact that we don’t yet understand very much about this glue, except in a few limited cases.

From superatom to superconductor

New research from the University of Southern California has taken a novel step towards improving our understanding of how superconductivity arises. Rather than study superconductivity in bulk materials like wires, Vitaly Kresin and his coworkers have managed to isolate and examine small clumps of a few dozen aluminium atoms at a time. These tiny clusters of atoms can act like a “superatom”, sharing electrons in a way that mimics a single, giant atom.

What is surprising is that measurements of these clusters reveal what may be the signature of electron pairing persisting all the way up to 100 kelvin (-173 °C). This is still a frosty temperature, of course, but it is roughly 100 times higher than the superconducting temperature of a piece of aluminium wire. Why does a small handful of atoms superconduct at a much higher temperature than the vast number of atoms that form a wire? Physicists have some ideas, but the effect is largely unexplored, and it might prove an interesting way forward in the quest for superconductivity at higher temperatures.


Hoverboards anyone?

If physicists were able to achieve the goal of room temperature superconductivity in a material that was easy to fashion into wires, important new technologies would soon follow. For starters, devices which use electricity would become considerably more efficient and consume less power.

Transporting electricity over long distances would also become much easier, which would be particularly useful for renewable energy applications. Some have proposed giant superconducting cables linking Europe with solar energy farms in North Africa.

The fact that superconductors will levitate above a strong magnet also creates possibilities for efficient, ultra-high speed trains that float above a magnetic track, much like Marty McFly’s hoverboard in Back to the Future. Japanese engineers have experimented with replacing the wheels of a train with large superconductors that hold the carriages a few centimetres above the track. The idea works in principle, but suffers from the fact that the trains need to carry expensive tanks of liquid helium with them in order to keep the superconductors cold.

Many superconducting technologies will probably remain on the drawing board, or too expensive to implement, unless a room temperature superconductor is discovered. It’s just possible, however, that the advances made by Kresin’s group might mark a milestone on this journey.


This article was originally published on The Conversation.
