Quantum theory can be misinterpreted to support false claims.

There is legit science to quantum theory, but misinterpretations of it are used to justify an assortment of pseudoscience. Let’s examine why.

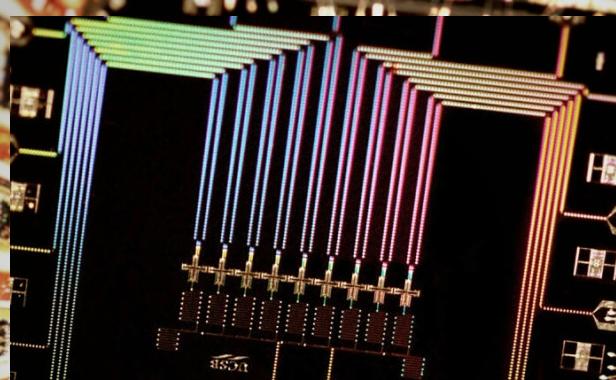

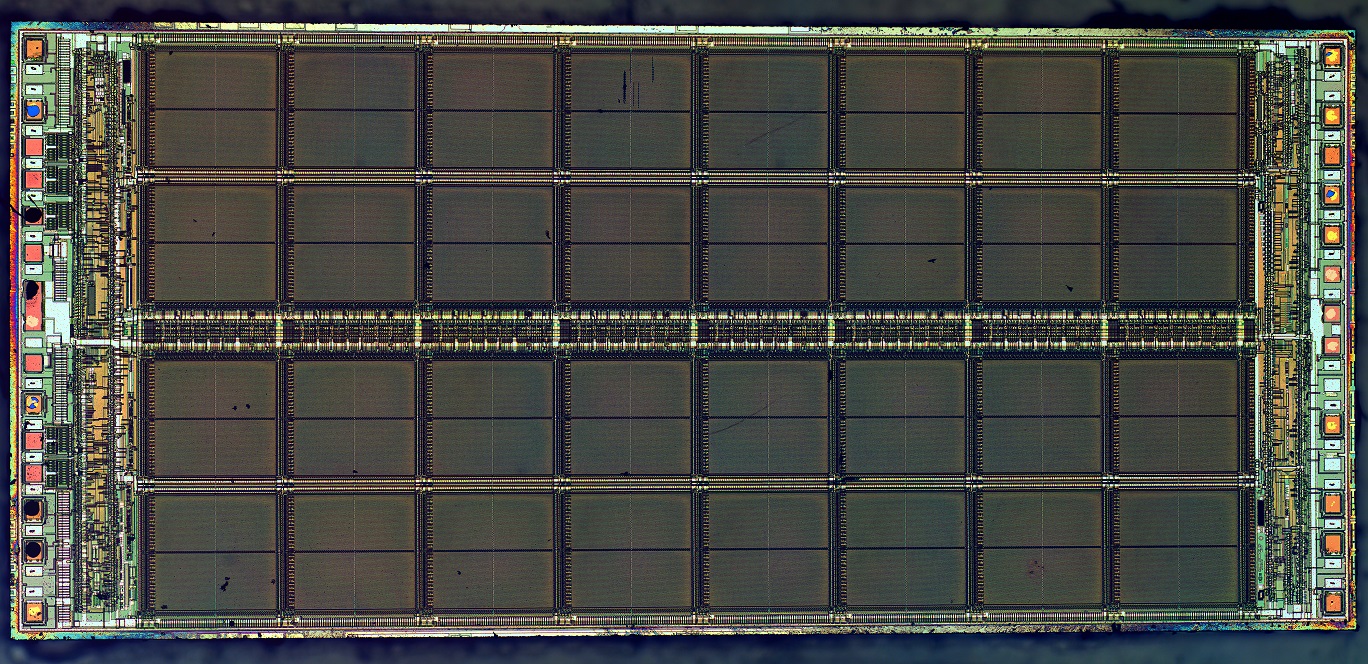

Quantum science isn’t a young science anymore. This year, 2015, the term “quantum”, as it relates to quantum physics, turns 113 years old. The term as we know it first appeared “in a 1902 article on the photoelectric effect by Philipp Lenard, who credited Hermann von Helmholtz for using the word in reference to electricity” (Wikipedia). During its first century of life, attempts to understand quantum particle behavior have led to a bunch of discoveries. Quantum physics has furthered understanding of key physical aspects of the universe. That complex understanding has been used to develop new technologies.

Quantum physics is enigmatic in that it pushes the limits of conceptualization itself, leaving it seemingly open to interpretation. While it has been used to predict findings and improve human understanding, it has also been used by charlatans who have a shaky-at-best understanding of science. Quantum physics has been misappropriated to support a bunch of downright unscientific ideas.

It’s easy to see why it can be misunderstood by well-intentioned people and foisted upon an unsuspecting public by new age hacks. The best minds in academia don’t always agree on secondary implications of quantum physics. No one has squared quantum theory with the theory of relativity, for example.

Most people are not smart enough to parse all the available research on quantum physics. The public’s research skills are notoriously flawed on any subject. The internet is rife with misinformation pitting researchers against their own lack of critical thinking skills. Anti-science and pseudoscience alike get a surprising amount of traction online, with Americans believing in a wide variety of superstitions and erroneous claims.

In addition to the public simply misinterpreting or misunderstanding the science, there is money to be made in taking advantage of gullible people. Here are some false claims that have erroneously used quantum theory as supporting evidence:

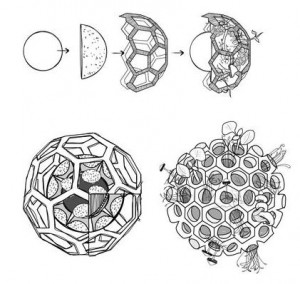

Many Interacting Worlds

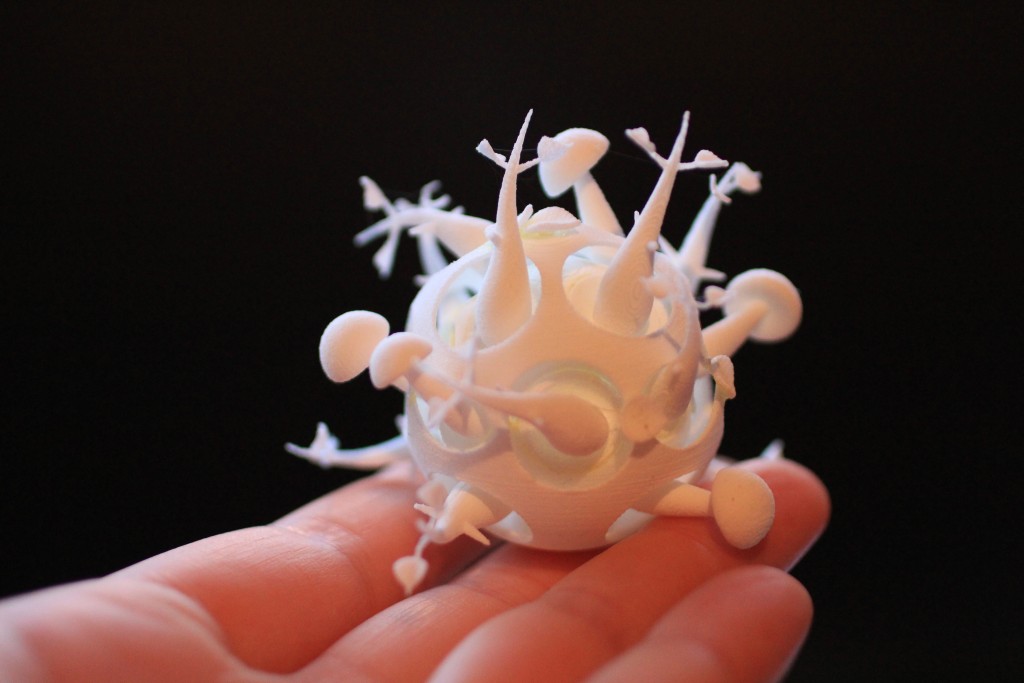

The internet loves this one. Contemporary multiple universe theories are philosophy, not science, but that didn’t stop Australian physicists Howard Wiseman and Dr. Michael Hall from collaborating with UC Davis mathematician Dr. Dirk-Andre Deckert to publish the “many interacting worlds” theory as legit science in the otherwise respectable journal, Physical Review X. This is the latest in a train of thought that forgoes scientific reliance on evidence and simply supposes the existence of other universes, taking it a step further by insisting we live in an actual multiverse, with alternate universes constantly influencing each other. Um, that’s awesome but it’s not science. You can read their interpretation of reality for yourself.

Deepak Chopra

Deepak Chopra is a celebrated new age guru whose views on the human condition and spirituality are respected by large numbers of the uneducated. By misinterpreting quantum physics he has made a career of stitching together a nonsensical belief system from disjointed but seemingly actual science. Chopra’s false claims can seem very true when first investigated, but science has explained key details that Chopra nonetheless considers mysterious.

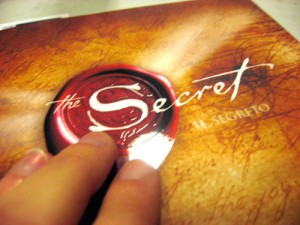

The Secret™

‘The Power’ and ‘The Secret’ are best-selling books that claim science supports what can be interpreted as an almost maniacal selfishness. The New York Times once described the books as “larded with references to magnets, energy and quantum mechanics.”

The Secret’s author, Rhonda Byrne, uses confusing metaphysics not rooted in any known or current study of consciousness by borrowing heavily from important-sounding terminology found in psychology and neuroscience. Byrne’s pseudoscientific jargon is surprisingly readable and comforting but that doesn’t make the science behind it any less bogus.

Scientology

There isn’t anything in quantum physics implying a solipsism or subjective experience of reality but that doesn’t stop Scientology from pretending we each have our own “reality” – and yours is broken.

Then there is the oft-headlining, almost postmodern pseudoscientific masterpiece of utter bullshit: Scientology.

Scientology uses this same type of claim to control its cult following. Scientology relies on a re-fabrication of the conventional vocabulary normal, English-speaking people use. The religion drastically redefined the word reality. L. Ron Hubbard called reality the “agreement.” Scientologists believe the universe is a construct of the spiritual beings living within it. The real world we all share is, to them, a product of consensus. Scientology describes, for example, mentally ill people as those who no longer accept an “agreed upon apparency” that has been “mocked up” by us spiritual beings, to use their reinvented terminology. Scientologists misuse the word reality to ask people, “what’s your reality?” There isn’t anything in quantum physics implying a solipsism or subjective experience of reality but that doesn’t stop Scientology.

In conclusion…

The struggle to connect quantum physics to spirituality is a humorous metaphor for subjectivity itself.

If you find yourself curious to learn more about quantum theory you should read up and keep an open mind, no doubt. The nature of a mystery is that it hasn’t been explained. Whatever evidence might help humanity understand the way reality is constructed is not going to come from religion or superstition; it will come from science. Regardless of the claims to the contrary, quantum theory only points out a gap in understanding and doesn’t explain anything about existence, consciousness or subjective reality.

Jonathan Howard

Jonathan is a freelance writer living in Brooklyn, NY.