The field of robot morality is quickly gaining attention as experts try to address the problem of a robot that simply follows instructions in a chain of command and cannot make decisions that override its superiors, as a human nurse might when giving pain medication to a patient. Computer scientists, psychologists, lawyers, linguists, philosophers, theologians and even (maybe most importantly) human rights experts are attempting to identify the specific set of decision-making points that robots have to work through in order to emulate our human perceptions of right and wrong in modern-day society. As Robin Marantz Henig of the New York Times describes it, a robot nurse “must not hurt its human. The robot must do what its human asks it to do. The robot must not administer medication without first contacting its supervisor for permission. On most days, these rules work fine.” But what happens when a robot decides to go behind a supervisor’s back and break the chain of command, and that decision ends up accidentally hurting the very human it’s attempting to help?

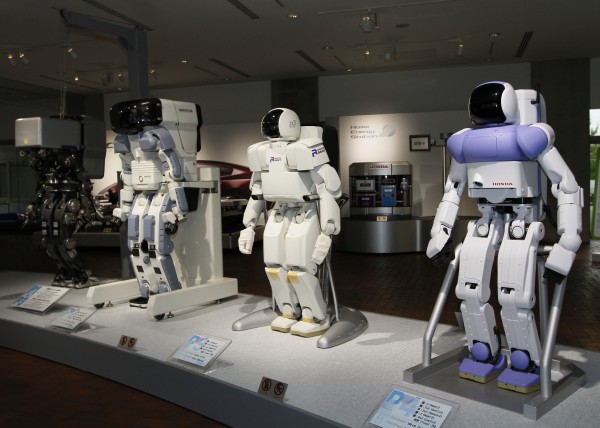

In the recent science fiction remake of RoboCop, we are presented with a future in which armed robot police are programmed to kill if fired upon. While the robots in the movie seem rather extravagantly designed, the real thing already exists, albeit in a rather primitive, grunt-like form. WIRED news claimed that a real cyborg body suit like RoboCop's is 100 years away because of the long battery life it would need, but the fact remains that most of the tech for autonomous robots is already out there. Experts are therefore concerned with how the moral and ethical issues that govern modern society are going to play out on such an experimental new frontier.